Advanced Audio Deepfake Detection

Cutting-edge machine learning research to identify synthetic voice manipulation and protect against audio fraud

State-of-the-art detection capabilities built on rigorous academic research

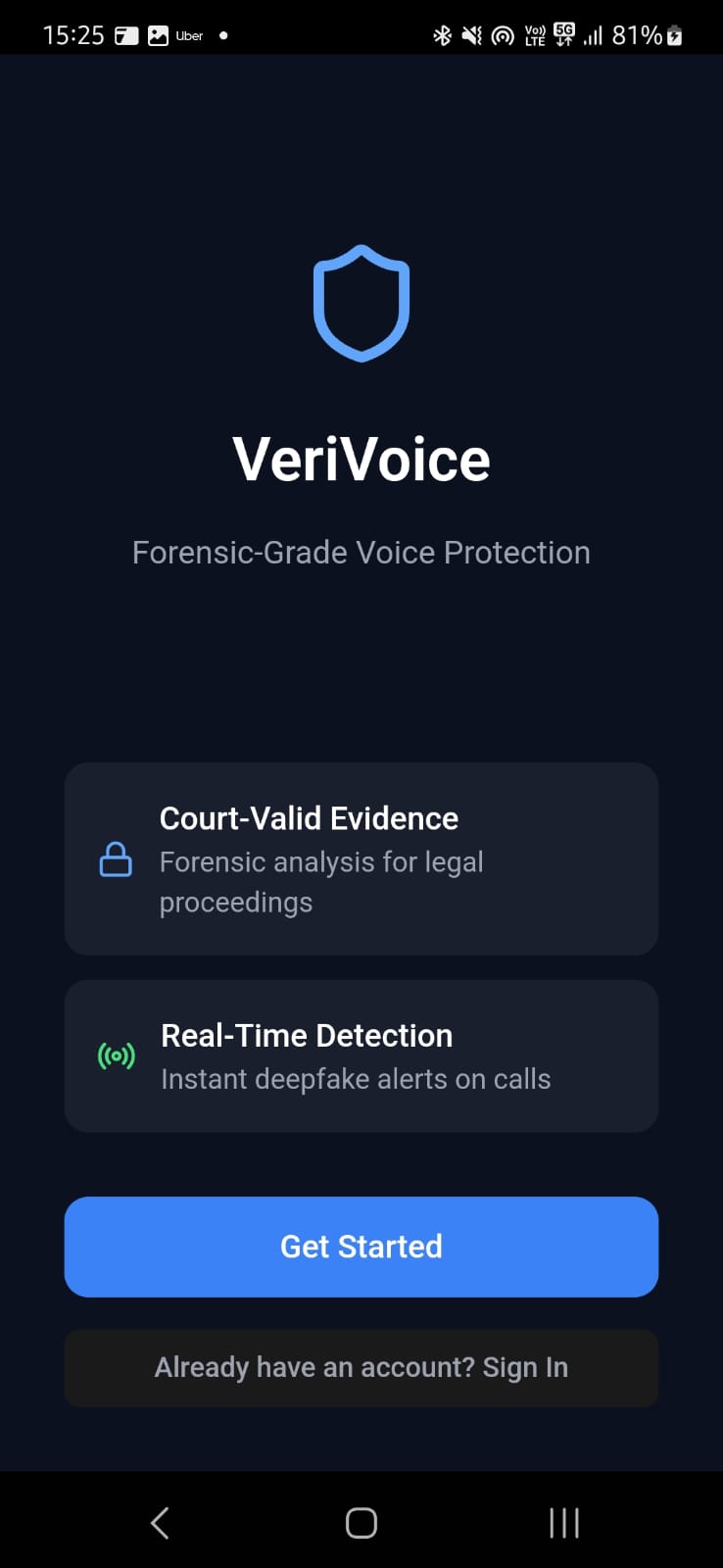

Real-time voice authentication and deepfake detection with forensic-grade accuracy for legal proceedings.

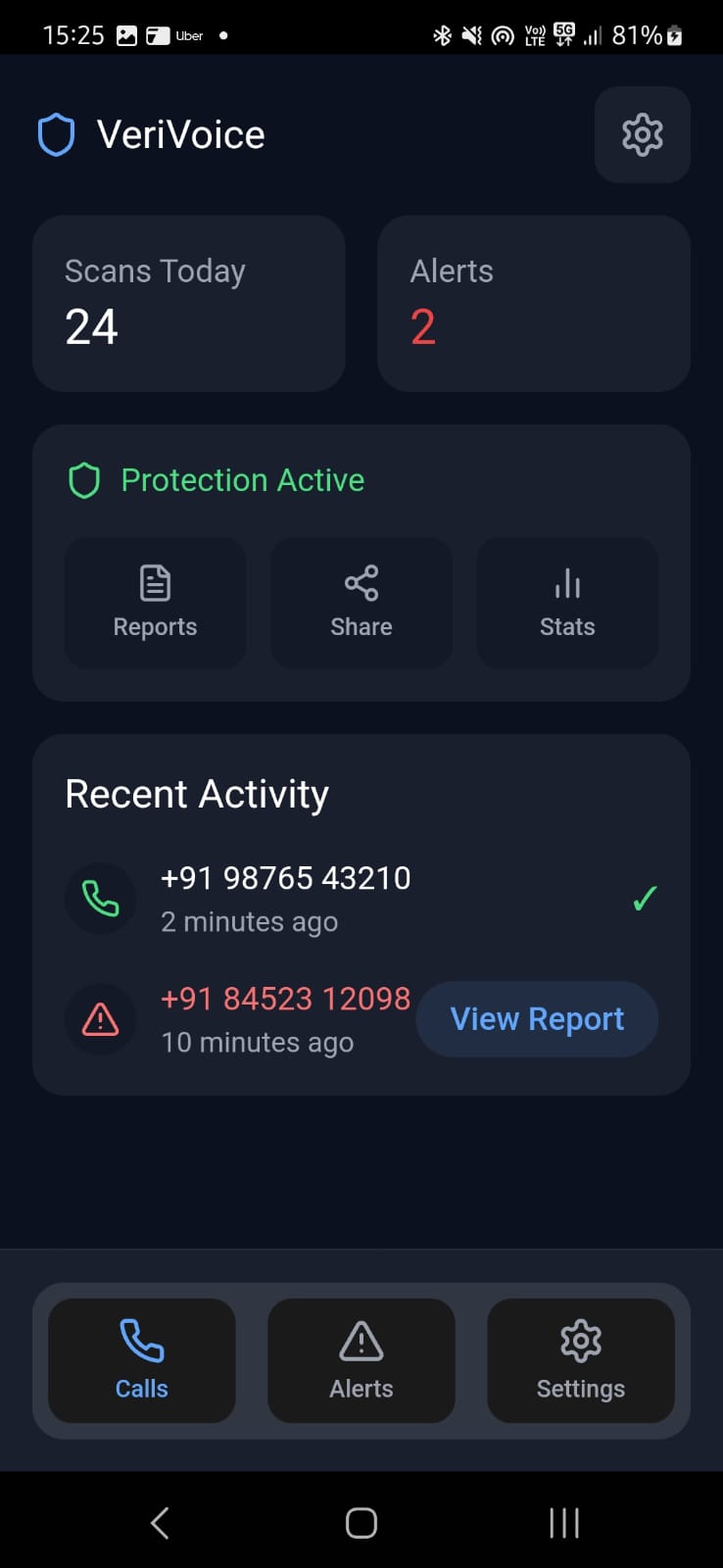

Monitor all voice interactions with instant alerts and comprehensive activity tracking across your organization.

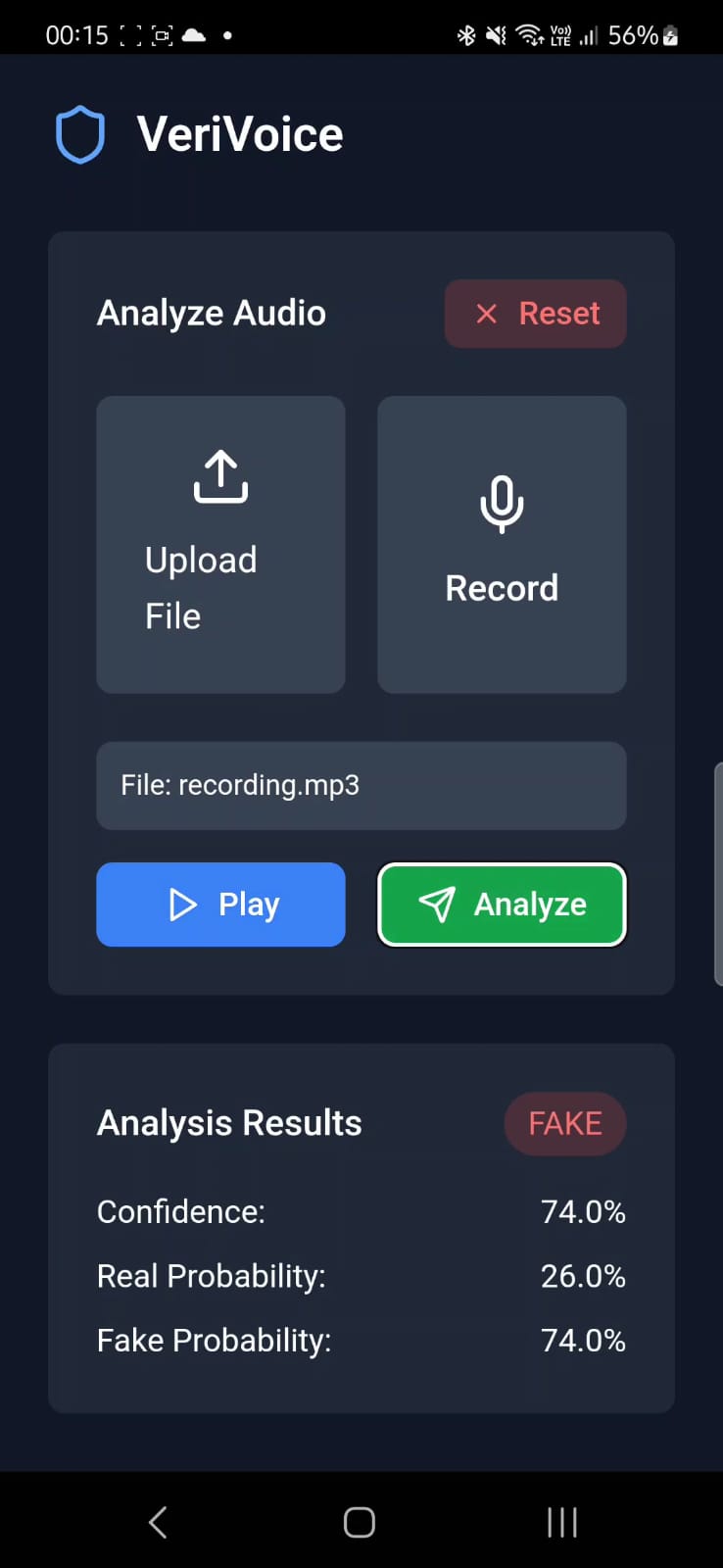

Upload or record audio for detailed multi-model analysis with confidence scores and probability metrics.

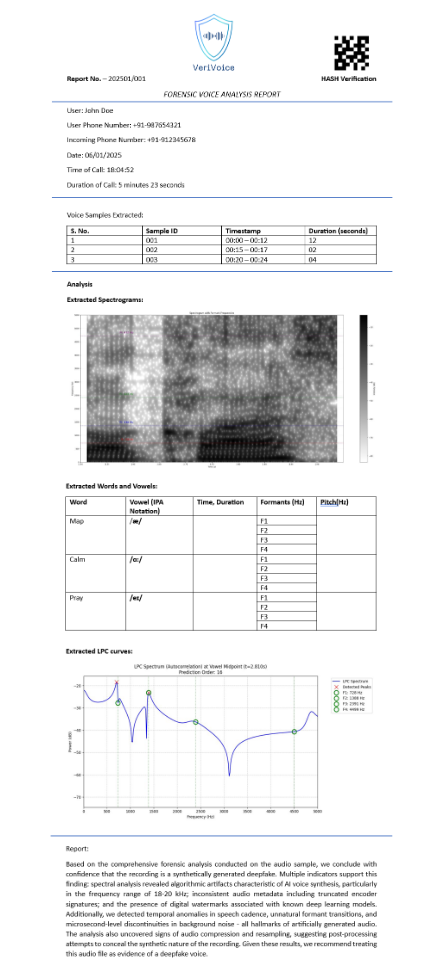

Court-admissible forensic reports with detailed voice analysis, spectrograms, and comprehensive audit trails.

Multi-model ensemble approach for robust deepfake identification

Upload or stream an audio file for analysis

An ensemble of specialized detection models processes the audio

Comprehensive report with confidence scores
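
The three steps above can be illustrated with a minimal sketch. It is not the product's actual implementation: the `analyze` function, the `EnsembleReport` type, the `constant_detector` stand-ins, and the choice of a simple average with a 0.5 threshold are all assumptions made for illustration. Real detectors would be trained models, and a real ensemble may weight or combine them differently.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, Sequence

# Assumption: each detector maps audio samples to a probability in [0, 1]
# that the clip is synthetic. Real detectors would be trained models.
Detector = Callable[[Sequence[float]], float]


@dataclass
class EnsembleReport:
    """Hypothetical report: per-model scores plus a combined verdict."""
    per_model: Dict[str, float]
    fake_probability: float
    label: str


def analyze(samples: Sequence[float],
            detectors: Dict[str, Detector],
            threshold: float = 0.5) -> EnsembleReport:
    """Run every detector on the audio and average their scores."""
    if not detectors:
        raise ValueError("at least one detector is required")
    # Clamp each score to [0, 1] so one misbehaving model cannot skew the mean.
    per_model = {
        name: min(1.0, max(0.0, detector(samples)))
        for name, detector in detectors.items()
    }
    fake_probability = mean(list(per_model.values()))
    label = "likely synthetic" if fake_probability >= threshold else "likely genuine"
    return EnsembleReport(per_model, fake_probability, label)


def constant_detector(score: float) -> Detector:
    """Placeholder detector that ignores the audio and returns a fixed score."""
    return lambda samples: score


if __name__ == "__main__":
    audio = [0.0, 0.1, -0.1, 0.05]  # stand-in for decoded audio samples
    report = analyze(audio, {
        "spectral_model": constant_detector(0.9),   # hypothetical model name
        "prosody_model": constant_detector(0.7),    # hypothetical model name
    })
    print(report.per_model, round(report.fake_probability, 2), report.label)
```

Averaging is only one way to combine scores. It is used here because it keeps each model's contribution visible in the report next to the combined figure.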

Peer-reviewed research advancing the field of audio deepfake detection

Real-world cases demonstrating the critical need for audio deepfake detection

Scammers gather victims' contact details, names, and other relevant information to make the fake call seem legitimate.

NDTV

The head of the world's biggest advertising group was the target of an elaborate deepfake scam that involved an artificial intelligence voice clone.

Guardian

There is a new scam in town even more sophisticated than fake FedEx packages, job offers or money for liking videos.

The Times of India

A multinational company based in Hong Kong has incurred a colossal loss of $25 million (around Rs 207 crore) due to a sophisticated deepfake scam.

India Today